The EU AI Act – Are You Ready for the First Enforceable Requirements?

As of Sunday 2 February 2025, the first provisions of the EU AI Act will apply. Companies must ensure that their staff have an adequate level of AI literacy and that prohibited AI systems are neither developed nor deployed. In this article, we aim to clarify the governance, risk, and compliance requirements these provisions entail. Are you ready for the first enforceable requirements?

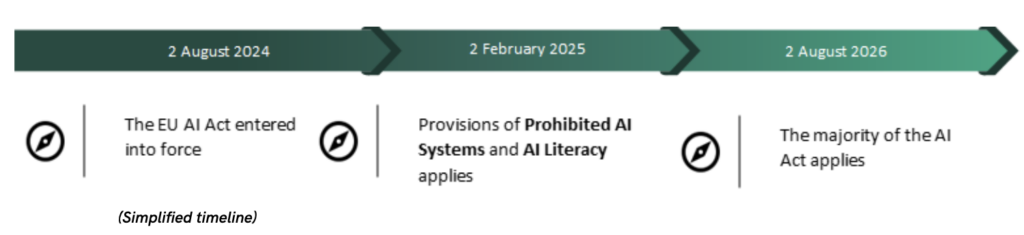

The AI Act Timeline

The AI Act was published in the Official Journal of the EU in July 2024 and entered into force in August 2024. Its application, i.e. the point at which each part of the Act becomes a compliance requirement and is formally enforceable, is spread across several deadlines. As of 2 February 2025, the first two sets of provisions become applicable: the prohibition of certain AI practices and the AI literacy obligation.

Prohibited AI Systems – The Requirement of a System Inventory

AI systems posing an unacceptable risk are prohibited as of 2 February 2025. The penalties for non-compliance are significant: up to €35 million or 7% of the company’s total worldwide annual turnover for the preceding financial year, whichever is higher.

Examples of prohibited AI practices include:

- Subliminal, manipulative, or deceptive techniques to distort behaviour and impair informed decision-making, causing significant harm

- Exploiting vulnerabilities related to age, disability, or socio-economic circumstances to distort behaviour, causing significant harm

- Social scoring, i.e., evaluating or classifying individuals or groups based on social behaviour or personal traits, causing detrimental or unfavourable treatment of those people

- Assessing the risk of an individual committing criminal offenses solely based on profiling or personality traits, except when used to augment human assessments based on objective, verifiable facts directly linked to criminal activity

- Inferring emotions in workplaces or educational institutions, except for medical or safety reasons

To ensure compliance, companies are advised to maintain an up-to-date inventory of their AI systems. Although not an explicit requirement of the AI Act, such an inventory is a necessary tool for keeping track of the AI systems a company uses or develops and of the corresponding governance, risk management, and compliance requirements.

As with all newly enacted legislation, the AI Act leaves plenty of room for interpretation. For example, what constitutes an AI system is far from crystal clear. In November 2024, the EU AI Office invited organisations that provide or deploy AI systems, as well as a range of other stakeholders, to answer questions on the prohibited practices established by the Act. Among other things, the consultation asked whether further clarification and examples are needed to determine whether a given AI system falls within the scope of a prohibition. Based on the responses received, the AI Office will develop guidelines intended to help providers and deployers achieve and demonstrate compliance. The guidelines are due to be adopted in “early 2025”.

AI Literacy – The Requirement of an AI Education Program

Simply put, companies have an obligation to ensure that their staff are sufficiently educated in the operation and use of AI systems. This obligation is contextual: the required level depends on the risk level of the AI systems concerned and the context in which they are used. AI literacy is about more than regulatory alignment; it also helps mitigate practical risks such as unauthorised data exposure, biased outputs, and hallucinations.

For example, developers who are not aware of security risks might accidentally share sensitive code or data with an AI system and thereby unlawfully disclose trade secrets to a third party. Recruitment staff using AI may overlook potential biases in the AI’s recommendations.

AI Literacy is one of the key components of an AI Governance program. As a rule, establishing an AI Literacy program involves three general steps:

- Define the AI Skills Needed. The first step is to define the required level of AI Literacy depending on, inter alia, whether the company uses or develops AI systems and the risk level of those AI systems

- Assess the Staff’s Current AI Skills. The second step is to assess the current AI know-how within the company

- Establish a Level-Based AI Literacy Program. Provide AI education at multiple levels, from hygiene-level AI literacy for all staff to advanced training for technical functions as well as governance, risk, and compliance functions. Depending on the required level of AI knowledge, recruitment might be necessary

The penalty provisions on administrative fines do not apply before 2 August 2025. That said, non-compliance may still be taken into account in liability claims and when assessing compliance with intersecting legislation such as the EU GDPR. The GDPR requires appropriate organisational measures, such as staff training, when personal data is processed.

Summary

On the surface, the requirements applicable as of 2 February 2025 may seem minor. In practice, they are a deliberate stepping stone before most of the AI Act becomes applicable in August 2026. Maintaining an up-to-date system inventory and establishing an appropriate and proportionate education program are not mere “compliance checkboxes”; the legislator intended these requirements as early steps on the AI Governance journey.

Learn more about the AI Act and our offerings in Data Privacy and Cyber & Digital Risk services.