Management of interconnected risks and operational risk centrality

Estimating and limiting an organization’s exposure to operational risk is a challenge for many, and today a large part of the work in this area is qualitative. By using the information they already gather to simulate and visualize their risk exposure from a new perspective, organizations can make informed, data-driven decisions in their risk work.

This lets organizations identify their largest operational risks and focus on the controls that have the greatest effect and matter most given the company’s risk appetite. Centrality analysis also helps counter chain reactions among interconnected risks, limiting the consequences when a loss event occurs.

Centrality provides a new perspective on the inherent risks in an organization

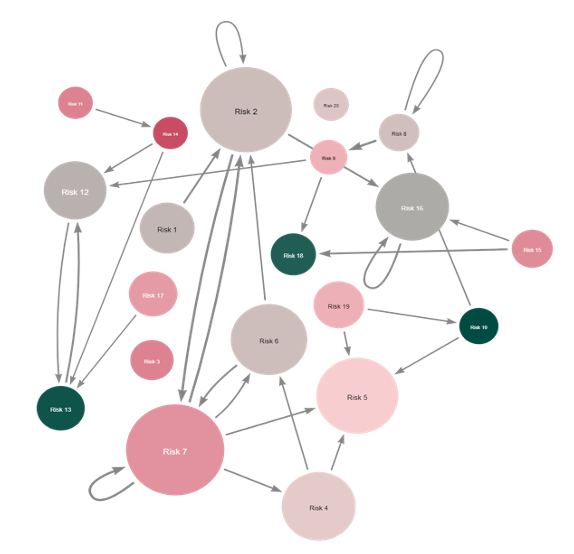

FCG’s article “Management of interconnected risks and centrality within operational risk” presents examples of how an organization’s operational risks can be modeled using network analysis, specifically centrality measures. The method lets a company visualize and quantify the links between the risks it has identified. The centrality scores can be simulated against the organization’s Value at Risk, which improves understanding of the loss exposure. This information allows a more accurate assessment of which risks are greatest and can support decisions about which controls to prioritize.

Traditionally, the relationships between a company’s risks are estimated and recorded in its risk register in the form of a matrix. This matrix is a directed adjacency matrix and serves as the input to a centrality analysis. Each value in the matrix is a relative ranking of how often a particular risk is likely to occur as a chain effect of another risk occurring.
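As a minimal sketch of how a directed adjacency matrix can feed a centrality analysis, the example below scores each risk with PageRank computed by power iteration. The article does not say which centrality measure FCG uses, so PageRank is an assumption made here for illustration, and the risk names and link weights are hypothetical. Running the calculation on the matrix itself highlights risks that are often triggered by others; running it on the transposed matrix highlights risks that tend to set off others.

```python
# Hypothetical risk register: names and link weights are illustrative only.
RISKS = ["IT outage", "Data breach", "Process failure", "Fraud"]

# ADJ[i][j] > 0 means "risk i occurring can trigger risk j" (directed edge i -> j).
# Larger values stand for a higher ranked likelihood of the chain effect.
ADJ = [
    [0, 2, 3, 0],
    [0, 0, 1, 2],
    [0, 0, 0, 1],
    [0, 1, 0, 0],
]


def pagerank(adj, damping=0.85, tol=1e-10, max_iter=1000):
    """PageRank by power iteration on a weighted, directed adjacency matrix."""
    n = len(adj)
    out_strength = [sum(row) for row in adj]
    rank = [1.0 / n] * n
    for _ in range(max_iter):
        # Score held by nodes without outgoing links is spread uniformly.
        dangling = sum(rank[i] for i in range(n) if out_strength[i] == 0)
        new = []
        for j in range(n):
            incoming = sum(
                rank[i] * adj[i][j] / out_strength[i]
                for i in range(n)
                if out_strength[i] > 0
            )
            new.append((1 - damping) / n + damping * (incoming + dangling / n))
        if sum(abs(a - b) for a, b in zip(new, rank)) < tol:
            return new
        rank = new
    return rank


def transpose(adj):
    """Reverse every edge so that scores flow back towards the triggering risk."""
    return [list(col) for col in zip(*adj)]


triggered = pagerank(ADJ)             # high score: often hit as a chain effect
drivers = pagerank(transpose(ADJ))    # high score: tends to set off other risks

for name, t, d in sorted(zip(RISKS, triggered, drivers), key=lambda x: -x[2]):
    print(f"{name:16s} triggered={t:.3f}  driver={d:.3f}")
```

Sorting by the driver score gives one possible ordering of which risks to address first when the aim is to break chains early. Other measures, such as degree or eigenvector centrality, could be substituted without changing the input format.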

Simulations enable an organization to gain insight regarding the impact of controls before they are introduced

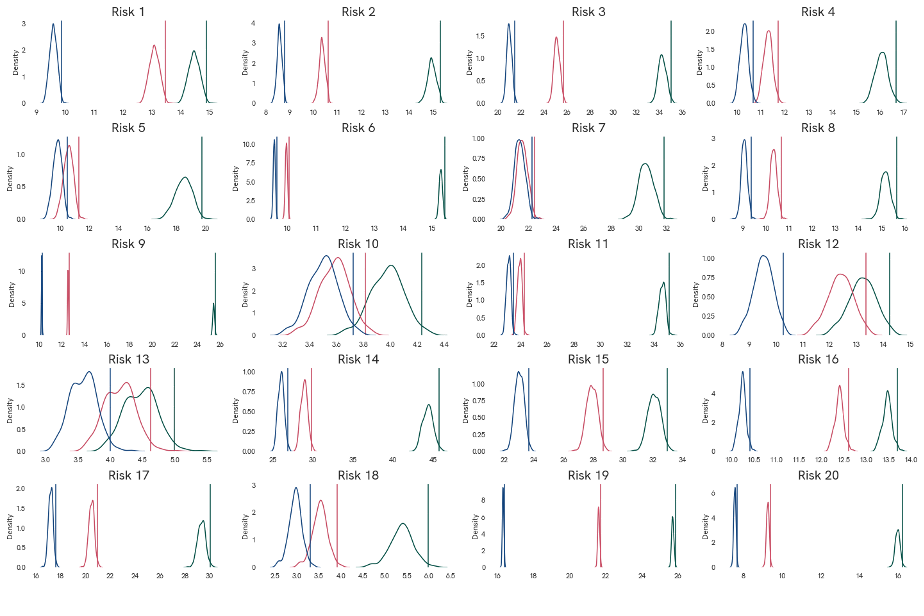

How the risks are linked is not the only thing that matters when an organization prioritizes controls against its risk appetite; the effect an introduced control is expected to have matters as well. Most organizations today do not have data of sufficient quality and quantity to base a simulation on.

However, the expert estimates made during scenario analysis (the frequency of each risk, the loss from each risk, and the expected efficiency of each control) make it possible to simulate the expected loss for each risk. The result of such a simulation is illustrated in the image accompanying this section.
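The sketch below shows one way such a simulation could be set up from expert estimates alone. It is not FCG’s model: the Poisson frequency, the lognormal loss size, the assumption that a control reduces frequency in proportion to its efficiency, and every parameter value are illustrative assumptions. It compares the simulated expected annual loss and a 99% Value at Risk for a single risk with and without a control.

```python
import math
import random


def poisson(rng, lam):
    """Draw a Poisson(lam) count by counting exponential arrivals within one year."""
    if lam <= 0:
        return 0
    count, t = 0, rng.expovariate(lam)
    while t < 1.0:
        count += 1
        t += rng.expovariate(lam)
    return count


def simulate_annual_losses(freq, median_loss, sigma, control_eff=0.0,
                           n_years=20000, seed=1):
    """Simulate total loss per year for one risk.

    freq        -- expert estimate of events per year (assumed Poisson)
    median_loss -- expert estimate of a typical loss per event (assumed lognormal)
    sigma       -- assumed spread of the lognormal loss size
    control_eff -- assumed share of events the control prevents (0..1)
    """
    rng = random.Random(seed)
    lam = freq * (1.0 - control_eff)  # assumption: the control lowers frequency
    mu = math.log(median_loss)
    losses = []
    for _ in range(n_years):
        events = poisson(rng, lam)
        losses.append(sum(rng.lognormvariate(mu, sigma) for _ in range(events)))
    return losses


def summarize(losses, level=0.99):
    """Return (expected annual loss, empirical Value at Risk at the given level)."""
    ordered = sorted(losses)
    index = min(len(ordered) - 1, int(level * len(ordered)))
    return sum(ordered) / len(ordered), ordered[index]


# Hypothetical expert estimates for a single risk.
before = summarize(simulate_annual_losses(freq=2.0, median_loss=100_000, sigma=0.8))
after = summarize(simulate_annual_losses(freq=2.0, median_loss=100_000, sigma=0.8,
                                         control_eff=0.4))

print(f"Without control: expected loss {before[0]:,.0f}, 99% VaR {before[1]:,.0f}")
print(f"With control:    expected loss {after[0]:,.0f}, 99% VaR {after[1]:,.0f}")
```

Repeating the comparison for each candidate control gives a rough estimate of its effect before it is introduced. A control that limits the size of each loss rather than the number of events could be modeled by scaling `median_loss` instead of `freq`.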

By leveraging both centrality and simulation, it is possible to make informed decisions to limit risk exposure

Combining the two approaches covers both sides of the prioritization problem: centrality analysis shows which risks are most interconnected and most likely to set off chain reactions, and simulation estimates how much a given control is expected to reduce losses. Together they give organizations a data-driven basis for identifying their largest operational risks and for focusing on the controls that matter most given the company’s risk appetite, limiting the consequences when a loss event occurs.

For more information, please contact Jim Gustafsson: